Seeing the Threat Sooner

Getting Left of Bang with AI

I recently sat down with ZeroEyes co-founder Sam Alaimo for a podcast episode where we talked about what it takes to get left of bang and prevent violence.

This article expands on that conversation and discusses how AI tools can fit into a threat prevention program.

Want to learn more? Join us for our upcoming webinar, “Seeing the Threat Sooner: Getting Left of Bang with AI.”

Introduction

You can’t prevent an attack you never see coming.

This instinctive, straightforward principle has long guided safety and security professionals seeking to improve their protection and violence prevention programs.

The goal of recognizing potential threats early guided the creation of the Marine Corps’ Combat Hunter Program in 2007 to help Marines, Soldiers, and Sailors get left of bang overseas. The same mindset guides schools, businesses, and cities working to prevent violence today.

What’s changing is the technology that makes this goal more attainable than ever.

The rise of artificial intelligence in security represents more than a technical upgrade. It enables an operational shift in how quickly organizations can detect potential threats and prompt a human to validate them and act before harm occurs. For professionals who have long been working to stay left of bang, this is one of the most exciting developments in years.

Extending the Time and Space for Action

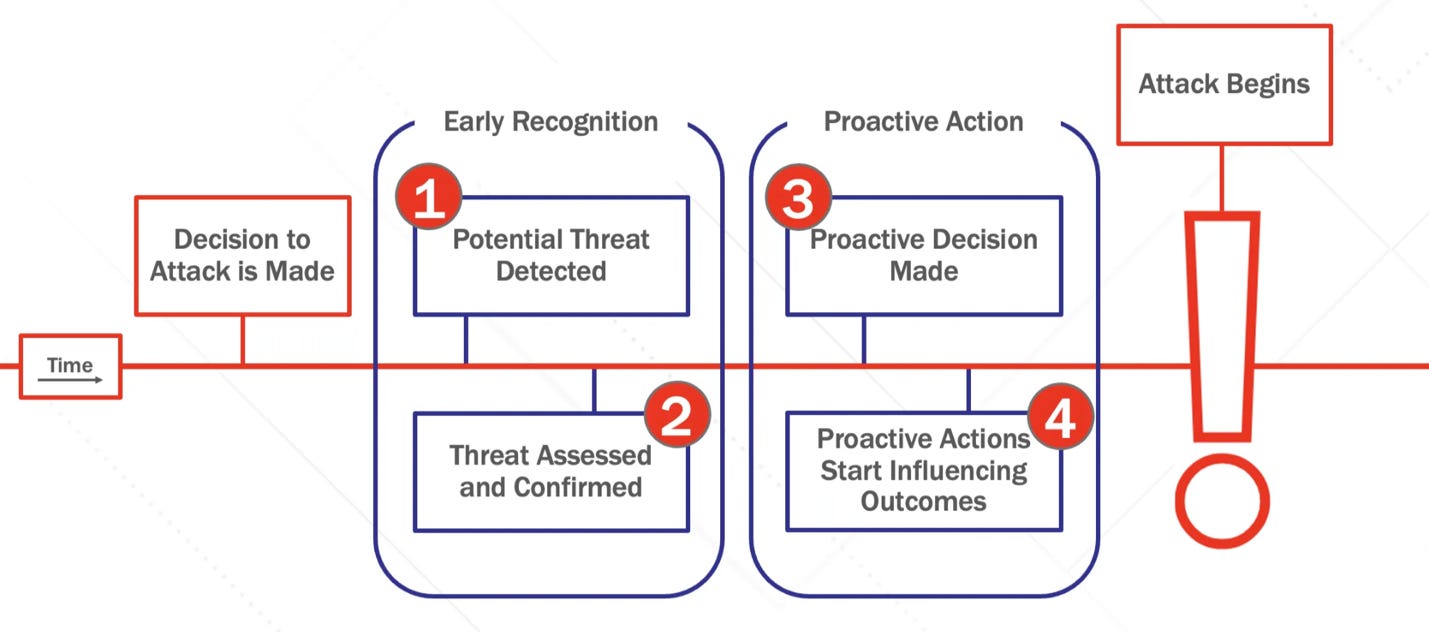

At its core, getting left of bang is about creating time and options before an incident begins by completing four steps between the moment a person decides to attack and the moment they act.

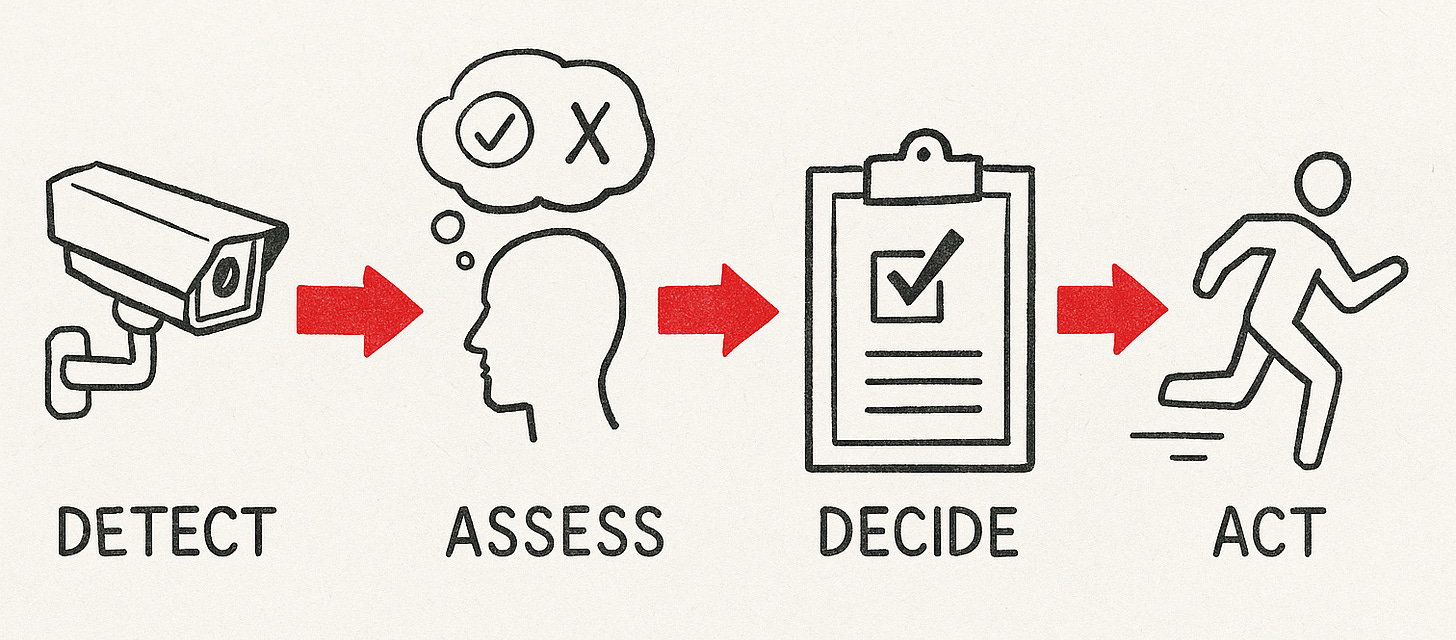

To get left of bang, an organization needs to be able to:

Detect potential threats early. Use people, sensors, networks, and situational awareness to spot pre-event indicators of violence.

Assess the threat and determine whether it is credible. Quickly evaluate whether an alert or behavior is valid and worth acting on or is a false positive, avoiding both paralysis and overreaction.

Decide on a course of action. Once a threat is confirmed, determine how to respond to prevent the attack or influence its outcome.

Act with enough time to influence the outcome. Deploy resources early enough to change how events unfold.

AI-enabled cameras strengthen this process by expanding the area that can be observed. Unlike traditional security cameras, which primarily record events, AI-enabled cameras can detect patterns, identify potential weapons, and alert teams before a human eye might notice. By continuously scanning hallways, parking lots, building entrances, and approach routes for weapons, they give safety and security professionals additional time to intervene.

When a potential threat can be detected seconds or minutes sooner, people on the ground gain the opportunity to position themselves effectively, confirm or disprove what the system sees, and act before “bang.”

But it’s essential to recognize that while the process ends once someone intervenes and stops the attack, it can’t begin without that first recognition of a potential threat. Recognition is the gateway every left-of-bang action has to pass through.

For a school resource officer, a corporate security team, or a local police agency, the extra time gained through earlier detection can be the difference between reacting to an attack and stopping one before it begins.

By automating observation across a larger space, AI-enabled cameras can reduce blind spots, free personnel to focus on intervention rather than monitoring, and provide the prompt that drives the rest of the system to act. This is where AI creates real value: it sees what humans can’t, and it does so without fatigue or distraction.

But technology alone doesn’t decide what to do next. That responsibility still belongs to the organization that must interpret, coordinate, and act on what the system sees.

From Detection to Decision: How Organizations Must Evolve to Keep Pace

The introduction of AI into security operations doesn’t just change how much an organization can see. It may also change how decisions are made once something is seen.

Traditionally, threat recognition has been an individual skill: the same person who noticed a potential threat was often the one responsible for responding to it. Integrating AI into safety and security operations shifts that dynamic and demands an organizational system of awareness, one in which a camera identifies a potential threat, another team assesses the alert, and yet another group responds.

While that system of awareness creates new opportunities for early intervention, it also demands specific organizational capabilities. When recognition and response are separated, the organization must evolve how it receives, shares, and communicates information so that an alert moves without delay from a third-party operations center to the organization, and then to the person tasked with assessment and intervention.

Unfortunately, there have been recent incidents in which a potential threat was detected, but coordination and decision-making failed in the follow-up steps. There have also been instances in which responding professionals bypassed the assessment process altogether and jumped into action prematurely.

AI tools can point teams in the right direction, but they can’t tell professionals what to do or how to act when they arrive on scene. Because alerts may include false positives, and because there are often legitimate reasons for someone to carry a firearm or to behave in a way that attracts attention, responders must be trained to make sense of the situation, validate what they see, and take appropriate action.

This is where training, policy, and leadership intersect to turn technological potential into real capability. That capability is built through:

Training and trust, so responders know how to interpret AI alerts with confidence.

Policies and thresholds, so the handoff from detection to decision is clear and consistent.

Coordination and communication, so detection leads to timely, unified action.

Accountability, so ownership of each decision remains human and not automated.

The information gathered by AI-enabled cameras can enhance situational awareness, but it’s still up to the professionals on the ground to interpret that prompt and decide how to act. Those individuals remain accountable for their decisions, and the organization is responsible for ensuring they’re prepared to make them.

AI expands awareness, but only an evolved organization can turn that awareness into informed and effective action.

Final Thought

No technology will identify every attacker. Some weapons are never visible. Some attackers never give off behavioral cues. But by extending both how far we can see and how soon we can act, AI changes the equation in favor of prevention.

And these systems will only improve over time. As databases expand, as models learn from false positives, and as operators become more skilled at interpreting the prompts, the tools will grow sharper and more reliable.

This technology has set a new performance baseline, allowing professionals to spend more time where it matters most: close to the people and places they protect. That’s why the conversation about AI in security must also be a conversation about organizational readiness. Technology expands awareness, but it’s leadership, training, and structure that turn that awareness into capability. The more effectively those pieces work together, the farther left of bang an organization can stay.

The goal now isn’t just to detect danger earlier. It’s to ensure that every organization is prepared to act on what it sees. Because the real advantage of AI isn’t its ability to recognize threats—it’s the opportunity it gives us to shape what happens next.
